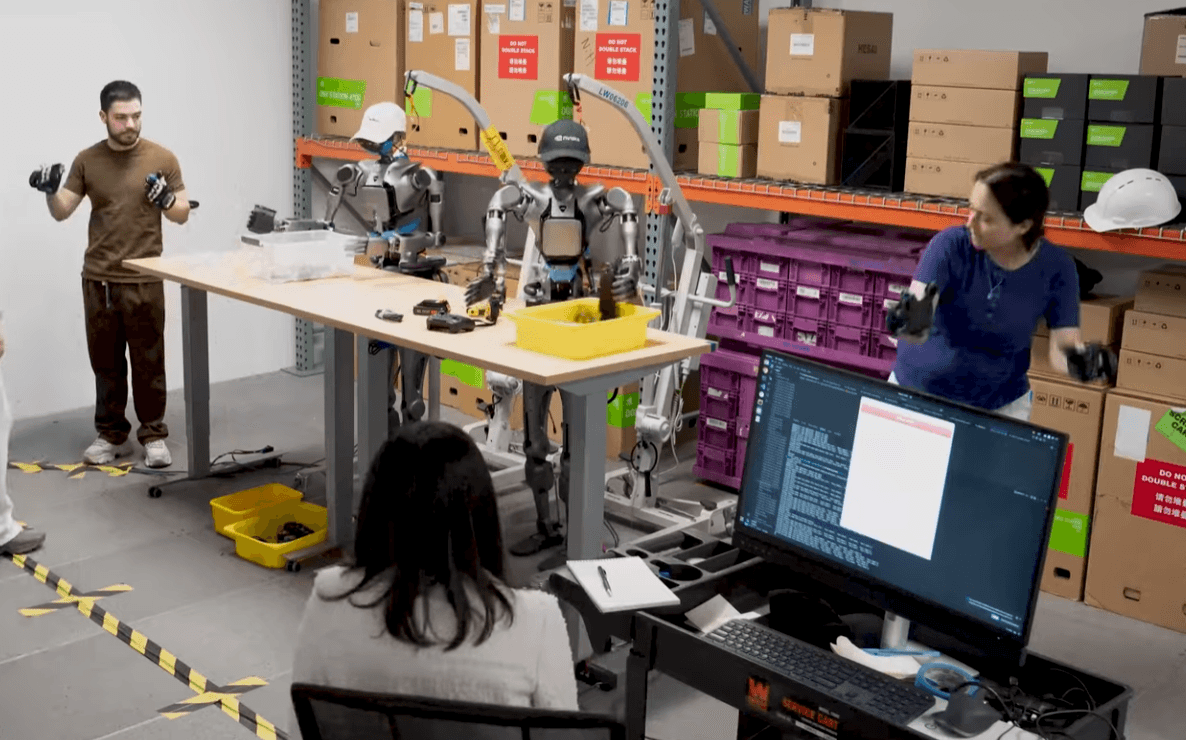

For a robot to move autonomously in the real world and perform tasks, it requires a massive amount of training data. However, collecting such data directly in the real world is expensive and time-consuming.

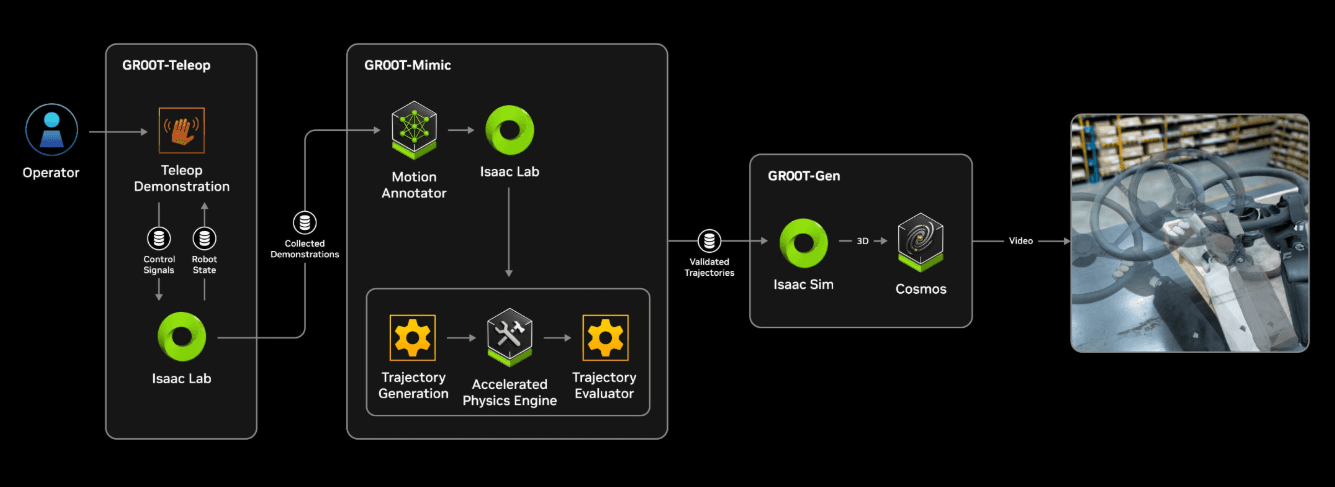

To address this, NVIDIA offers the GR00T ecosystem, a full pipeline that covers the automatic generation and augmentation of robot-learning data as well as training and inference.

This document summarizes how GR00T-Dreams and GR00T-Mimic differ, and how each generates training data that real robots can use.

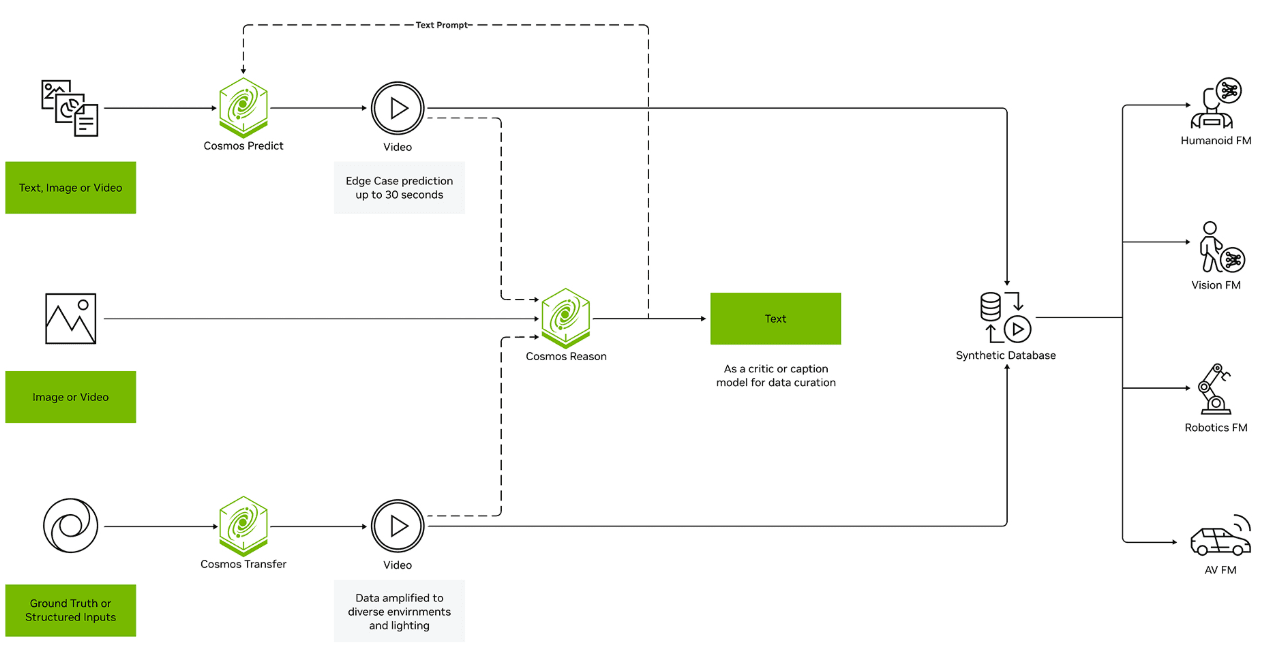

GR00T is not a single model but an ecosystem of multiple components that together form the full robot-learning pipeline.

GR00T Ecosystem Components

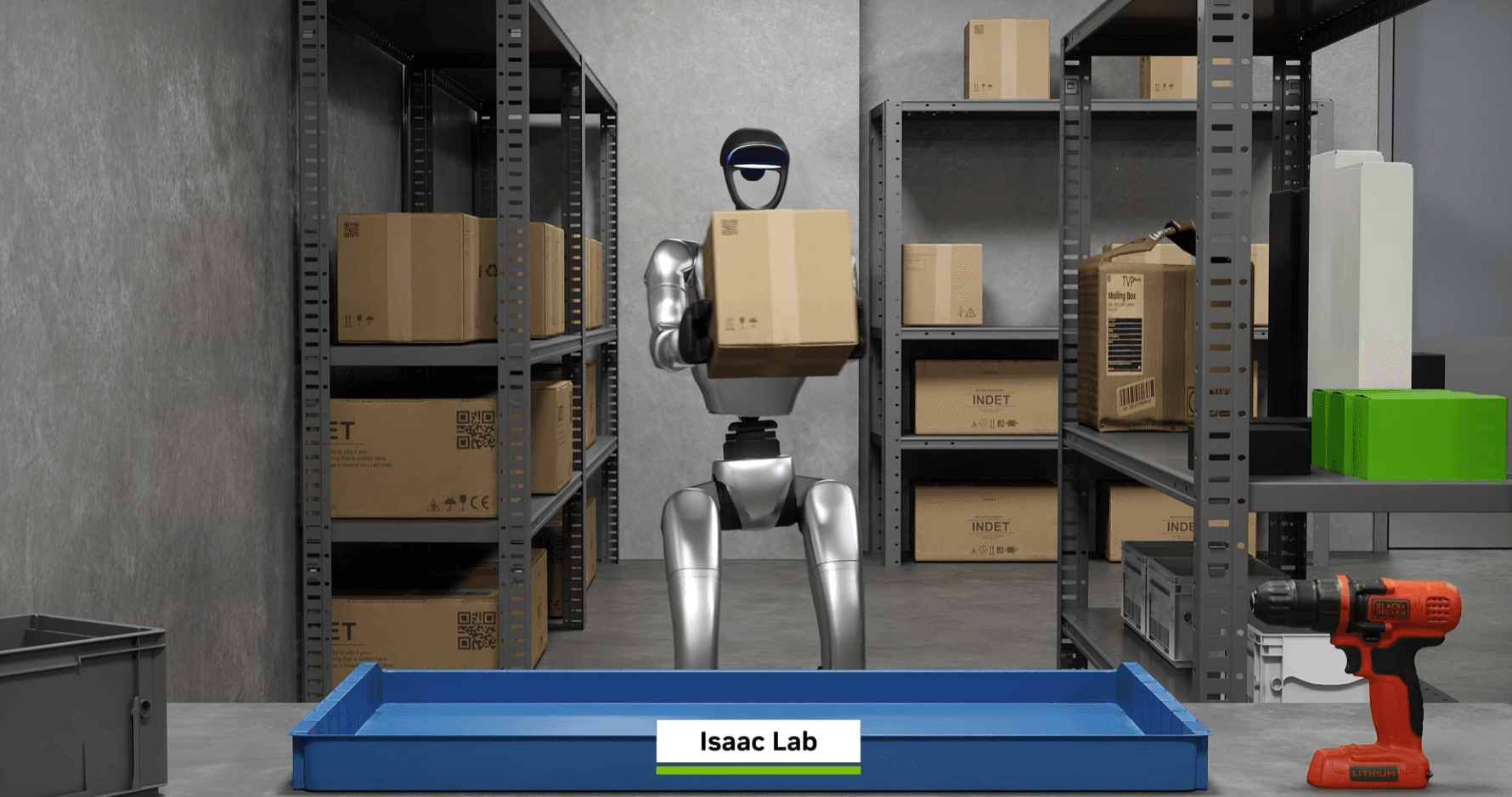

In short, Dreams and Mimic handle data generation, Isaac Lab handles learning, and the robot foundation model (RFM) handles execution.

Concept

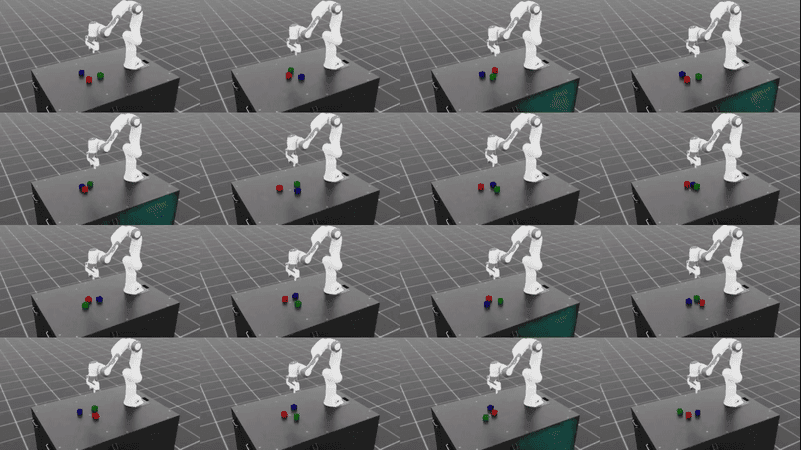

As its name suggests, Mimic is a pipeline that imitates, transforms, and expands existing demonstration data.

Its inputs include:

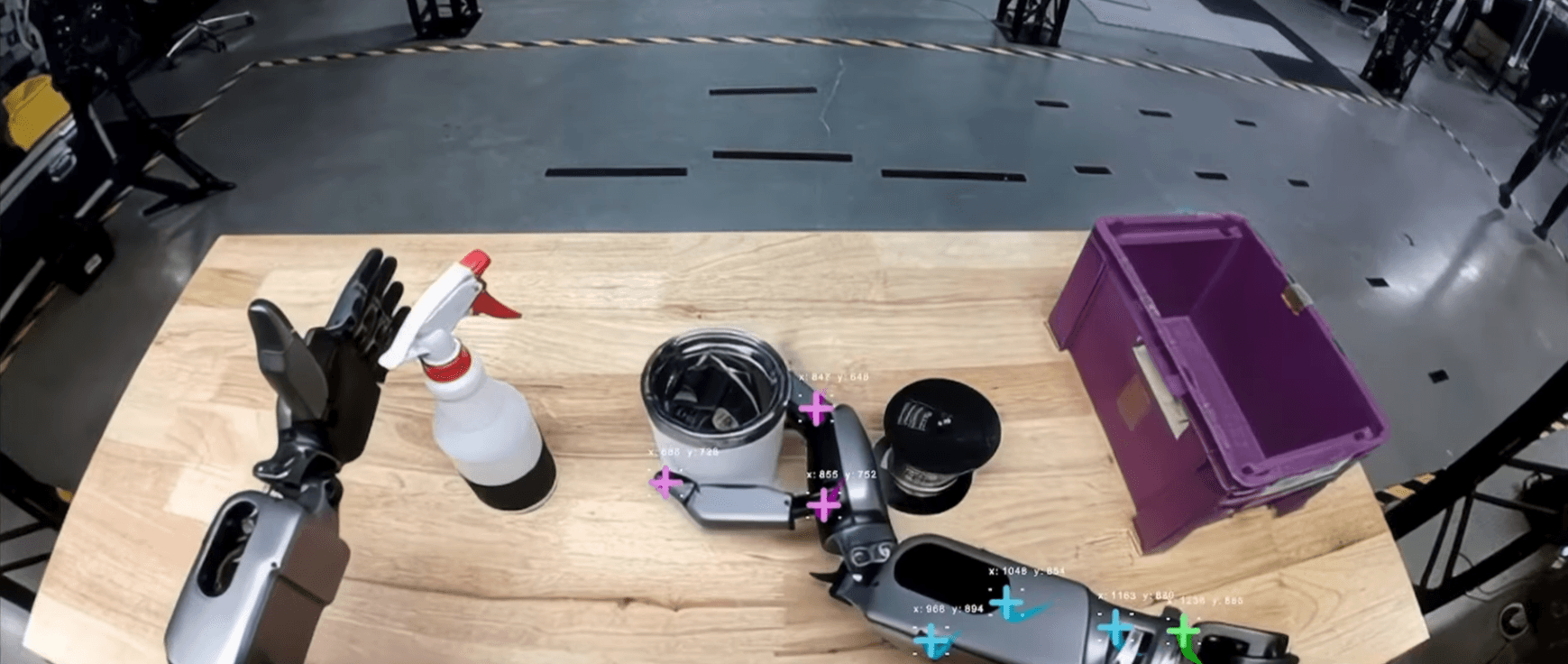

By altering environments, lighting, object placement, motion speed, or object states, Mimic generates large volumes of new training data.
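The idea of expanding one demonstration into many can be sketched in a few lines of Python. This is only an illustration and not the GR00T-Mimic API: the `Demo` class, the `augment` and `expand` functions, and the parameter ranges are all hypothetical, and the sketch varies only object placement and motion speed. It also assumes the whole trajectory can be shifted rigidly along with the object, which is a strong simplification.

```python
import random
from dataclasses import dataclass


@dataclass
class Demo:
    """Hypothetical demonstration: timestamps plus 2D end-effector waypoints."""
    times: list[float]
    waypoints: list[tuple[float, float]]
    object_xy: tuple[float, float]


def augment(demo: Demo, rng: random.Random,
            max_shift: float = 0.05,
            speed_range: tuple[float, float] = (0.8, 1.25)) -> Demo:
    """Return one variation with a shifted object and a rescaled motion speed."""
    dx = rng.uniform(-max_shift, max_shift)
    dy = rng.uniform(-max_shift, max_shift)
    speed = rng.uniform(*speed_range)
    return Demo(
        # A faster motion (speed > 1) compresses the timestamps.
        times=[t / speed for t in demo.times],
        # Simplification: move every waypoint by the same offset as the object.
        waypoints=[(x + dx, y + dy) for x, y in demo.waypoints],
        object_xy=(demo.object_xy[0] + dx, demo.object_xy[1] + dy),
    )


def expand(demo: Demo, n: int, seed: int = 0) -> list[Demo]:
    """Generate n variations of a single demonstration, reproducibly."""
    rng = random.Random(seed)
    return [augment(demo, rng) for _ in range(n)]


seed_demo = Demo(
    times=[0.0, 0.5, 1.0],
    waypoints=[(0.0, 0.0), (0.1, 0.05), (0.2, 0.1)],
    object_xy=(0.2, 0.1),
)
variants = expand(seed_demo, n=100)
print(len(variants), "variations generated from one demonstration")
```

A real pipeline would also re-render the scene with different lighting and environments and check each variation in simulation; the sketch only shows why a handful of demonstrations can turn into a large dataset.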

Features

Summary

Mimic is optimized for “mastering known tasks.”

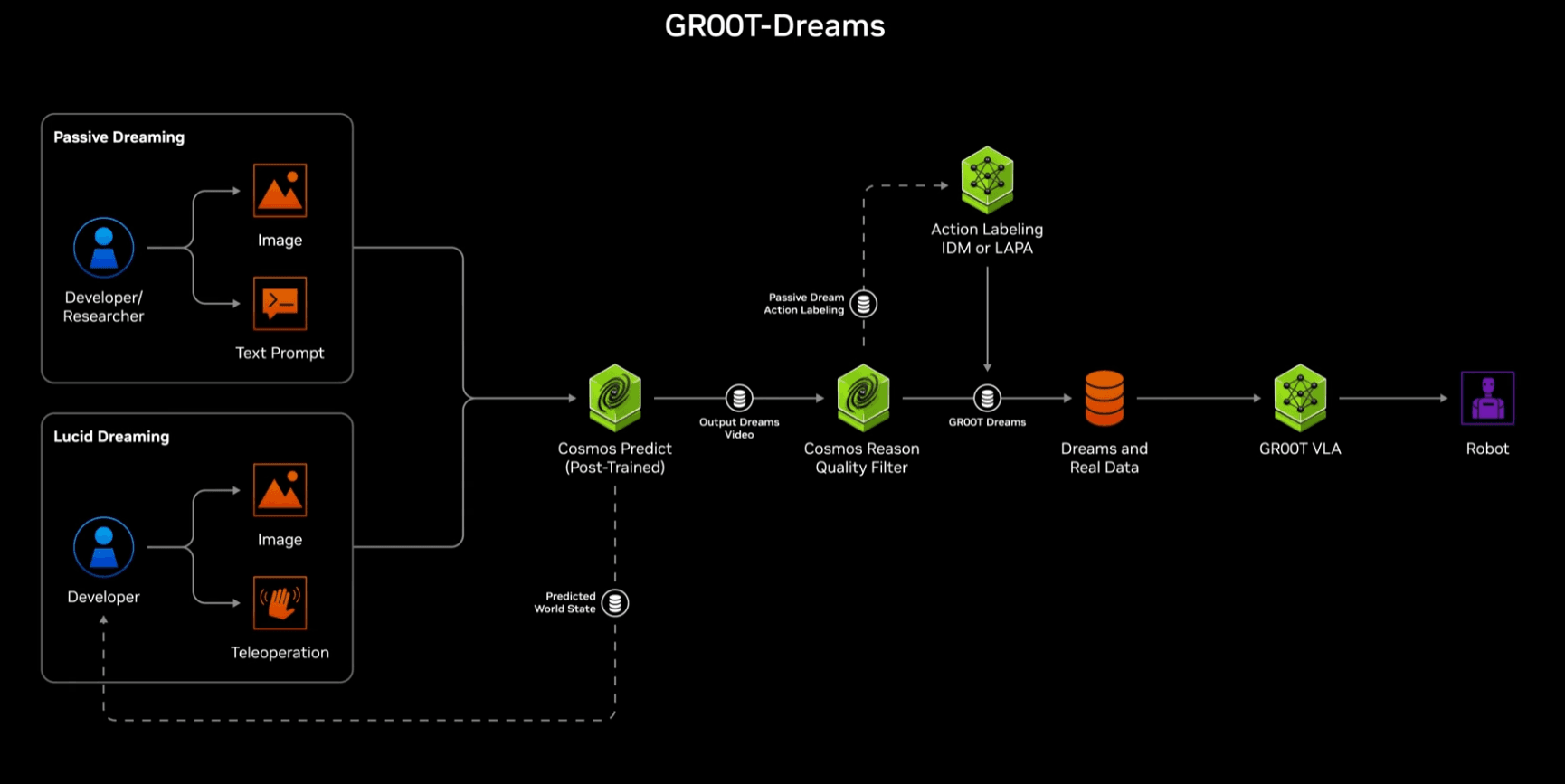

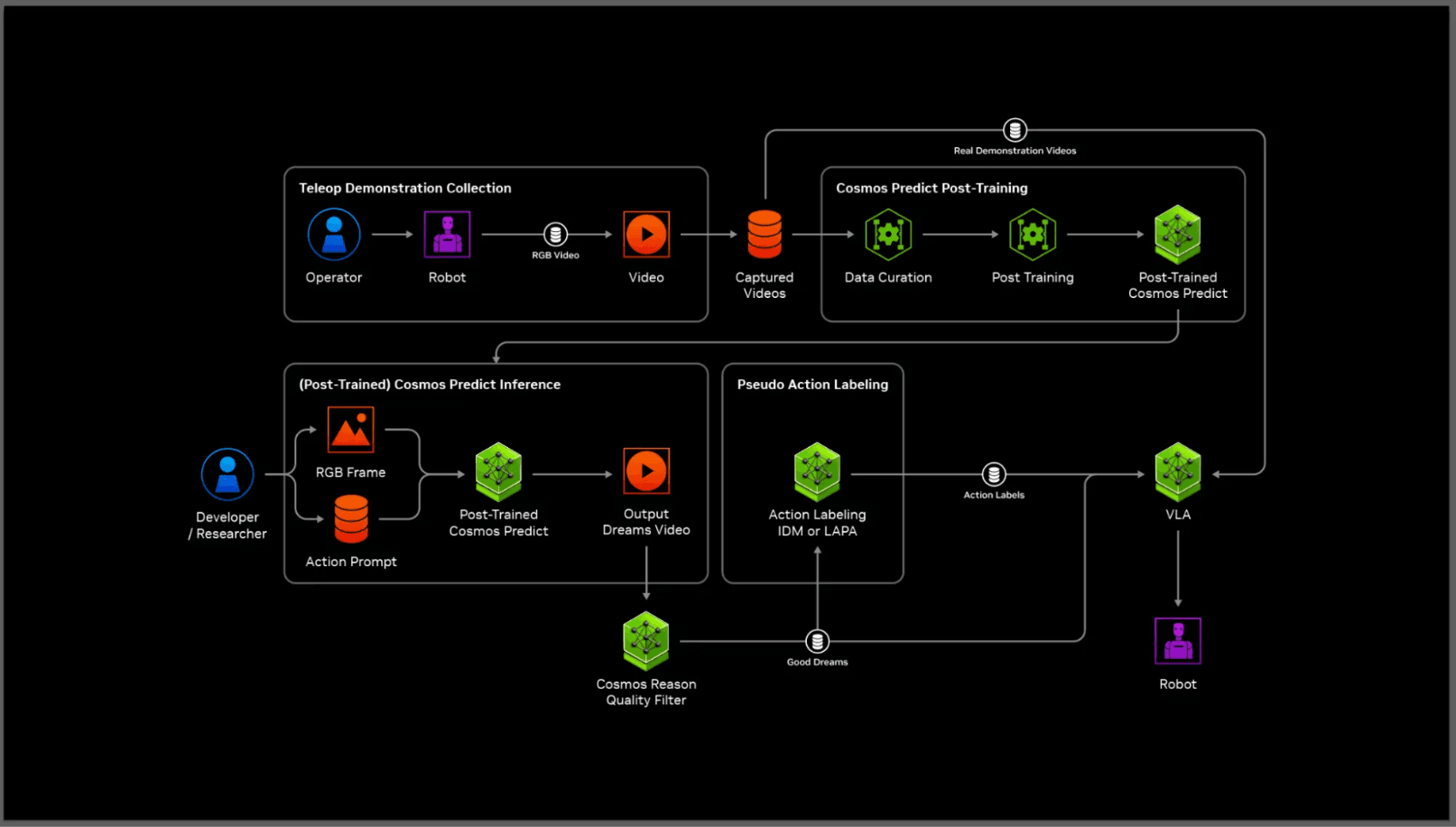

Concept

Dreams creates entirely new task scenarios from scratch.

It can start from extremely minimal input, such as:

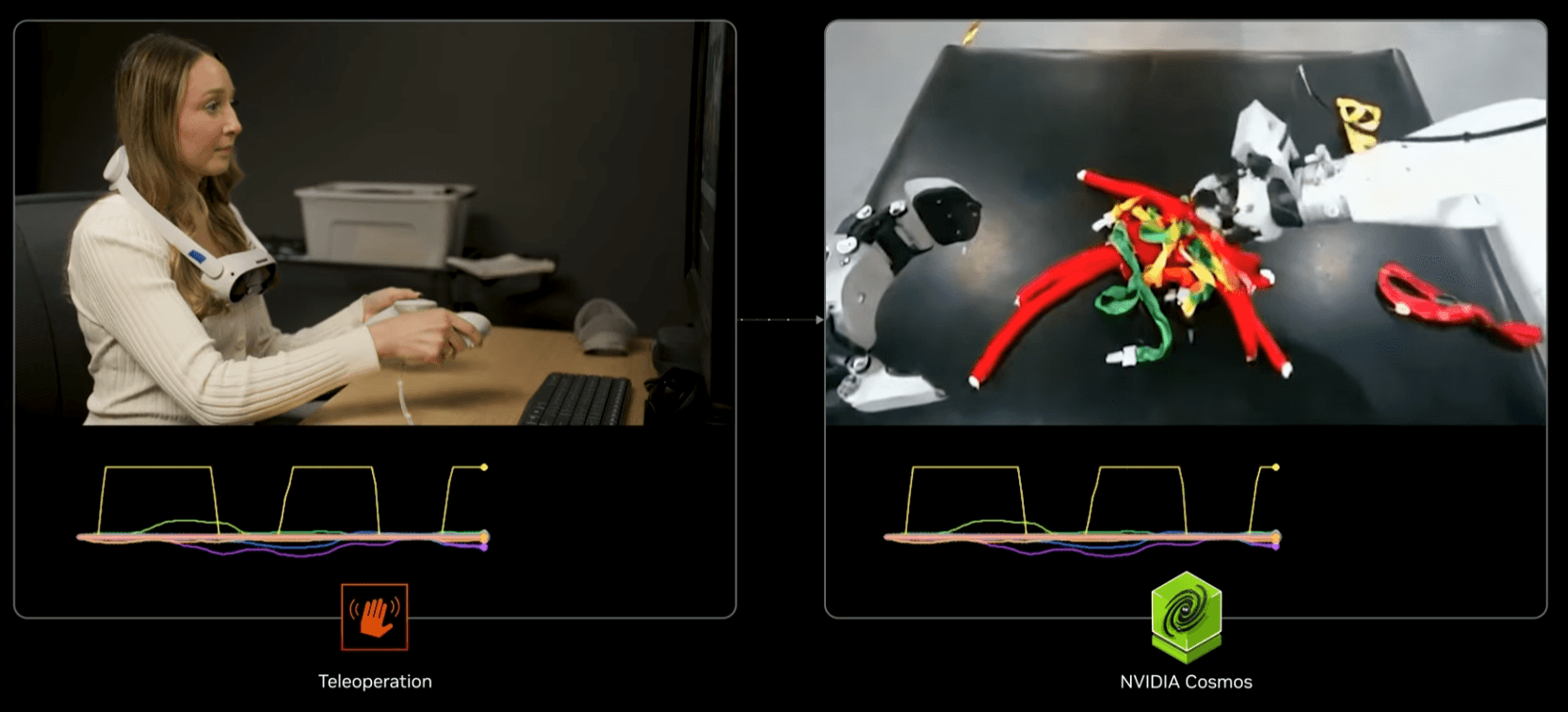

Dreams generates human action videos from these minimal inputs, and Cosmos analyzes those videos to convert them into robot-learnable trajectories.

Key Features

Many people think “Dreams is simply a video generator.”

However, the real value lies beyond the video.

After generating a video, Dreams runs a full pipeline that converts it into structured robot-training data.

In other words, Dreams performs the full transformation:

video → 3D pose → robot trajectory → physics-based torque.

This conversion pipeline is where Dreams’ technical strength shows most clearly.

① DreamGen: Action Video Generation

② Cosmos Predict/Reason: 3D Pose Reconstruction

From each video frame, Cosmos extracts:

Pixel-based video is transformed into structured 3D motion data.

③ Retargeting: Human Motion → Robot Joint Space

Human 3D motion is converted into the robot’s joint space (q, qdot), respecting:

At this stage, Action Tokens (robot action representations) are generated.
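To make the (q, qdot) representation concrete, here is a minimal sketch of the retargeting step. It is not NVIDIA's implementation. It assumes the human motion has already been expressed as per-joint angles (real retargeting typically needs inverse kinematics to get there), and it assumes joint position limits are among the constraints to respect. The `retarget` function and the example limits are hypothetical, and the sketch stops short of producing Action Tokens.

```python
def retarget(human_angles, joint_limits, dt):
    """Clamp per-frame joint angles to robot limits and estimate velocities.

    human_angles: list of frames, each a list of joint angles in radians
    joint_limits: list of (lower, upper) bounds, one pair per joint
    dt:           time between consecutive frames in seconds
    Assumes at least one frame is provided.
    """
    # q: joint positions, clipped so the robot never leaves its allowed range.
    q = [
        [min(max(angle, lo), hi) for angle, (lo, hi) in zip(frame, joint_limits)]
        for frame in human_angles
    ]
    # qdot: backward finite difference. The first frame has no predecessor,
    # so its velocity is set to zero.
    qdot = [[0.0] * len(joint_limits)]
    for prev, cur in zip(q, q[1:]):
        qdot.append([(c - p) / dt for p, c in zip(prev, cur)])
    return q, qdot


human_angles = [[0.0, 1.0], [0.5, 2.0], [1.0, 3.0]]
joint_limits = [(-0.8, 0.8), (0.0, 2.5)]  # hypothetical robot limits
q, qdot = retarget(human_angles, joint_limits, dt=0.1)
print(q[-1])  # last frame after clamping to the joint limits
```

In the last frame both joints exceed their limits and are clamped, which also lowers the estimated velocity for that step.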

④ Inverse Dynamics: Reconstructing Physical Quantities

To execute motions in the real world, the robot needs physics values such as:

As a result, Dreams generates complete trajectory data ready for immediate policy training.
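For intuition about the inverse dynamics step, rigid-body dynamics relates joint motion to torque in the general form τ = M(q)q̈ + C(q, q̇)q̇ + g(q). The sketch below applies this to the simplest possible case, a single-link pendulum with a point mass at the tip and no friction, where it reduces to τ = m·l²·q̈ + m·g·l·sin(q). The function name, the parameters, and the finite-difference acceleration are illustrative assumptions, not part of Dreams.

```python
import math


def inverse_dynamics(q, dt, mass=1.0, length=0.5, gravity=9.81):
    """Torque needed to follow a single-link pendulum trajectory.

    q:  joint angles in radians, measured from the downward vertical
    dt: time between consecutive samples in seconds
    Returns one torque per interior sample, because the central-difference
    acceleration needs a neighbor on each side.
    """
    torques = []
    for i in range(1, len(q) - 1):
        # Central finite difference for the joint acceleration.
        qdd = (q[i + 1] - 2 * q[i] + q[i - 1]) / dt ** 2
        inertial = mass * length ** 2 * qdd
        gravitational = mass * gravity * length * math.sin(q[i])
        torques.append(inertial + gravitational)
    return torques


# Holding the link horizontal needs only the gravity term m * g * l.
hold = inverse_dynamics([math.pi / 2] * 3, dt=0.01)
print(hold)
```

A multi-joint humanoid needs the full mass matrix and Coriolis terms, usually computed by a physics engine or a rigid-body dynamics library, but the principle is the same: positions and their derivatives go in, torques come out.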

| Category | GR00T-Mimic | GR00T-Dreams |
|---|---|---|
| Starting Point | Existing demonstrations | Minimal input (text/image/short demo) |
| Purpose | Skill refinement (known task) | Generalization (novel task) |
| Approach | Data augmentation | Generating new scenarios |
| Technology | Isaac Sim + Cosmos-Transfer | DreamGen + Cosmos Predict/Reason |
| Output | Variations of existing tasks | New task trajectories & physics data |

Dreams and Mimic serve different purposes and use different technologies, yet both play critical roles in generating robot-training data.

Training Humanoid Robots With Isaac GR00T-Dreams

https://www.youtube.com/watch?v=pMWL1MEI-gE

Teaching Robots New Tasks With GR00T-Dreams

https://www.youtube.com/watch?v=QHKH4iYYwJs

GR00T: NVIDIA Humanoid Robotics Foundation Model

https://www.youtube.com/watch?v=ZSxYgW-zHiU

Isaac GR00T-Mimic: Isaac Lab Office Hour
