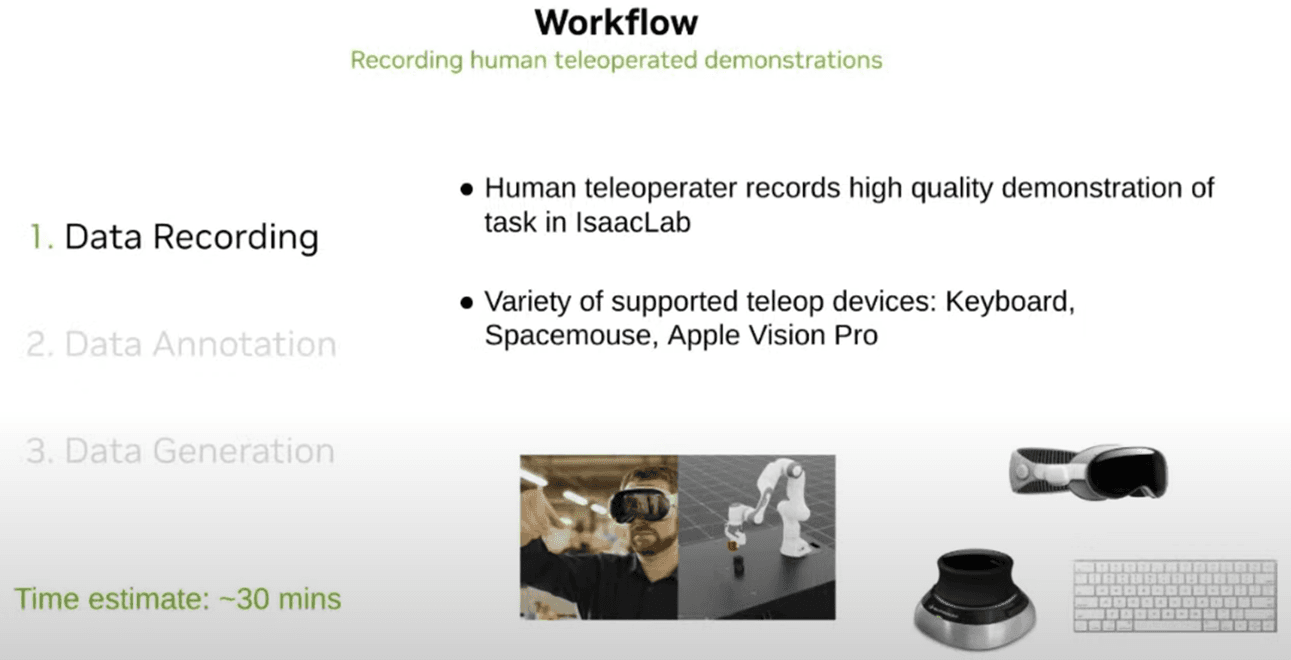

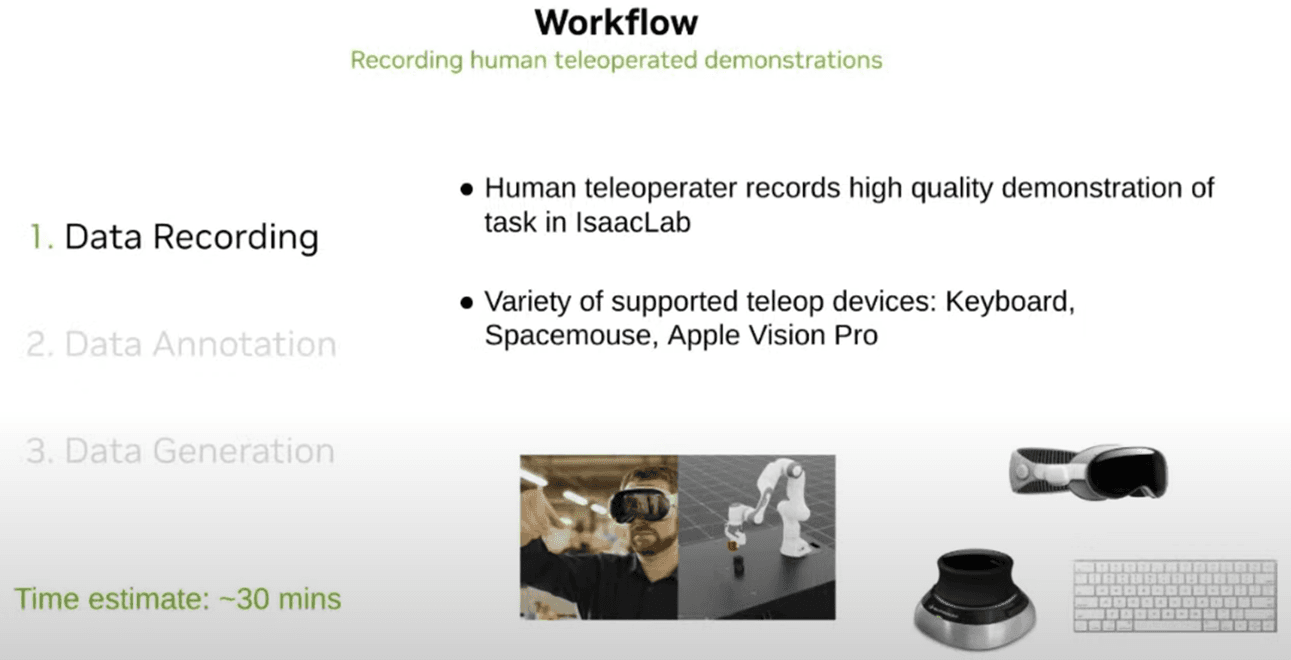

GR00T-Mimic Workflow: Step 1 – Data Recording

Key Message

The GR00T-Mimic workflow begins by collecting a small number of human teleoperation demonstrations. These demonstrations are the seed from which large-scale robot training data is later generated, which keeps data collection efficient and scalable.

Summary

- Data Recording Process

  - A human operator remotely controls the robot, and the demonstration data is recorded in Isaac Lab.

  - This teleoperated data forms the foundation for robot learning.

- Supported Teleoperation Interfaces

  - Input devices such as a keyboard, SpaceMouse, and Apple Vision Pro are supported.

  - Other control interfaces can also be easily integrated within Isaac Lab.

- Large-Scale Training Data from Minimal Demonstrations

  - Typically, 5–20 demonstrations are enough to generate up to 1,000 training trajectories.

  - A skilled operator can complete the initial data collection in approximately 10–30 minutes.

- Key Advantages

  - Minimizes the human teleoperation workload

  - Adapts readily to different robots and environments

  - High-quality initial data enables large-scale learning
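The numbers in this summary imply a large multiplication factor between recorded and generated data. A minimal Python sketch of that arithmetic follows, using only the figures quoted above (5–20 demonstrations, up to 1,000 trajectories). The function name `expansion_factor` is hypothetical and is not part of Isaac Lab.

```python
def expansion_factor(num_demos: int, num_generated: int) -> float:
    """Average number of generated trajectories per recorded demonstration."""
    if num_demos <= 0:
        raise ValueError("num_demos must be positive")
    return num_generated / num_demos


# Range quoted in the summary: 5-20 demonstrations, up to 1,000 trajectories.
for demos in (5, 10, 20):
    print(f"{demos} demos -> {expansion_factor(demos, 1000):.0f}x expansion")
```

Even at the upper end of 20 demonstrations, each recorded demonstration would stand behind roughly 50 generated trajectories, which is why the quality of the initial recordings matters.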

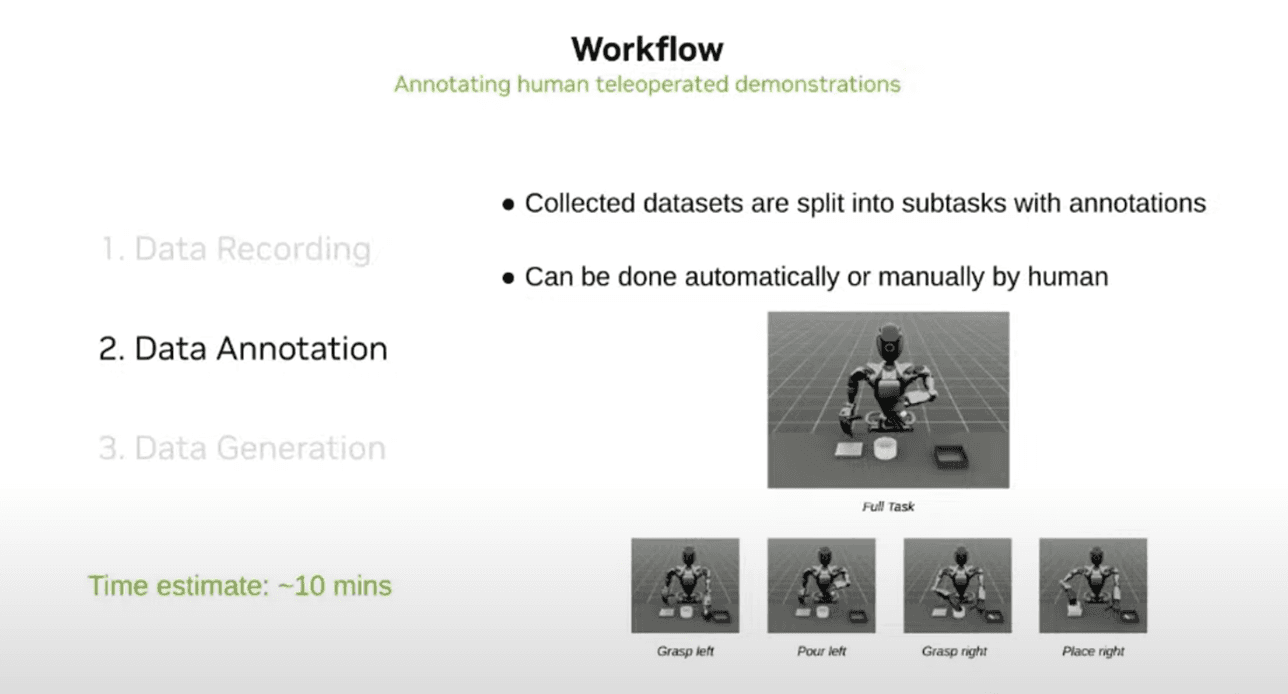

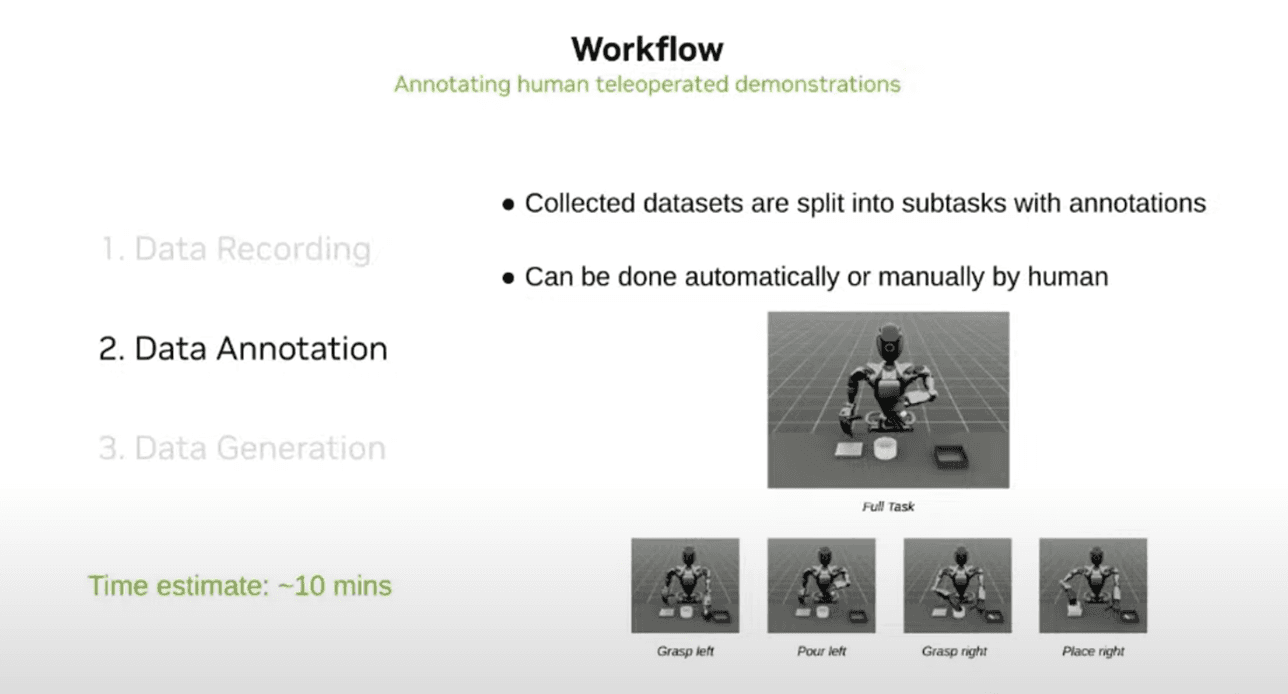

GR00T-Mimic Workflow: Step 2 – Data Annotation

Key Message

Data annotation divides the teleoperation demonstrations into subtasks that are easier to learn from. It can be done manually or automatically, and it is a critical step in converting raw human demonstrations into structured training data.

Summary

- Segmenting Teleoperation Datasets

  - Teleoperated demonstrations are segmented into subtasks.

  - Examples: Grasp, Pour, Place, separated at the boundaries between robot actions.

- Automatic vs. Manual Annotation

  - Simple tasks can be segmented automatically.

  - Manual annotation is recommended for complex tasks.

- Time Required

  - With a small number of demonstrations, annotation typically takes less than 10 minutes.

- Key Advantages

  - Converts demonstration data into a format suitable for robot learning

  - Increases training efficiency and helps ensure data quality
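Segmentation by subtask can be pictured as splitting the step indices of one demonstration at annotated boundaries. The sketch below is illustrative only: the function `segment_by_end_steps`, the 100-step demonstration, and the boundary indices are all hypothetical, and the code does not reflect how Isaac Lab stores annotations.

```python
from typing import Dict, List, Tuple


def segment_by_end_steps(
    num_steps: int, end_steps: List[Tuple[str, int]]
) -> Dict[str, Tuple[int, int]]:
    """Split steps [0, num_steps) into named subtasks.

    end_steps lists (subtask_name, last_step_index) in execution order.
    Each subtask starts on the step right after the previous one ends.
    """
    segments: Dict[str, Tuple[int, int]] = {}
    start = 0
    for name, end in end_steps:
        if not (start <= end < num_steps):
            raise ValueError(f"bad end step {end} for subtask {name!r}")
        segments[name] = (start, end)  # inclusive bounds
        start = end + 1
    return segments


# Hypothetical 100-step demonstration with the three subtasks named above.
annotation = [("grasp", 29), ("pour", 74), ("place", 99)]
segments = segment_by_end_steps(100, annotation)
print(segments)
```

Only the end of each subtask is supplied here; the start of each segment follows from the end of the previous one.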

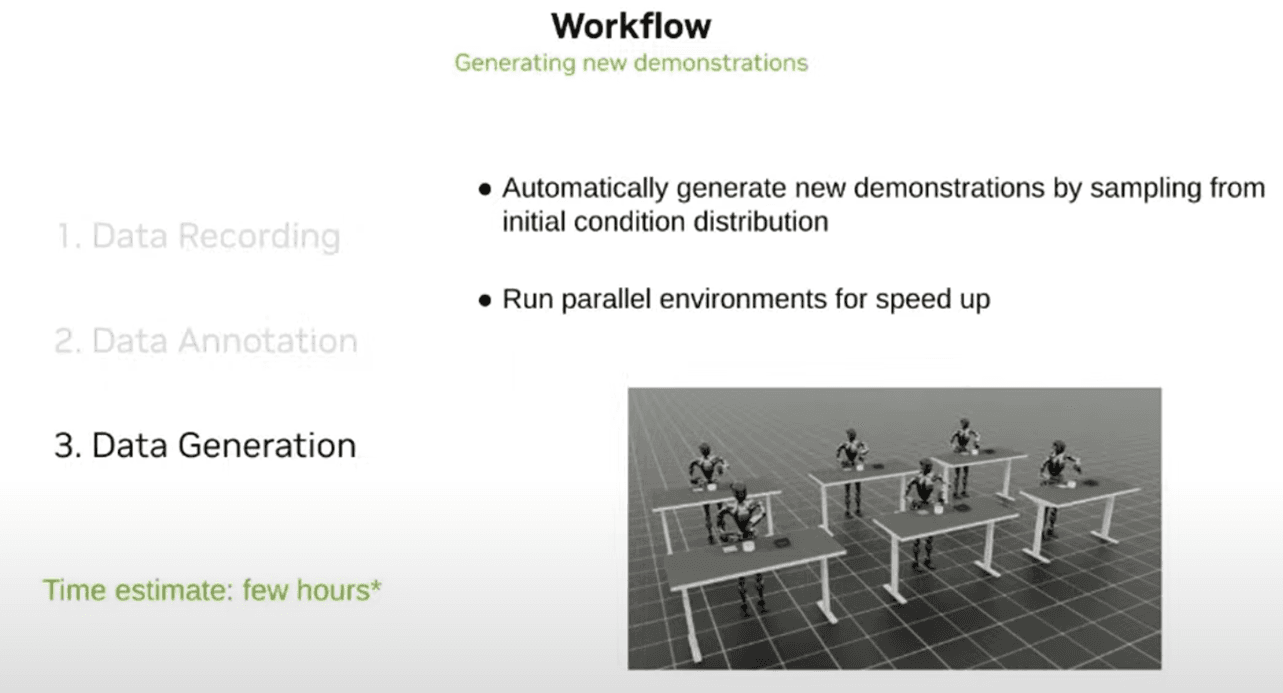

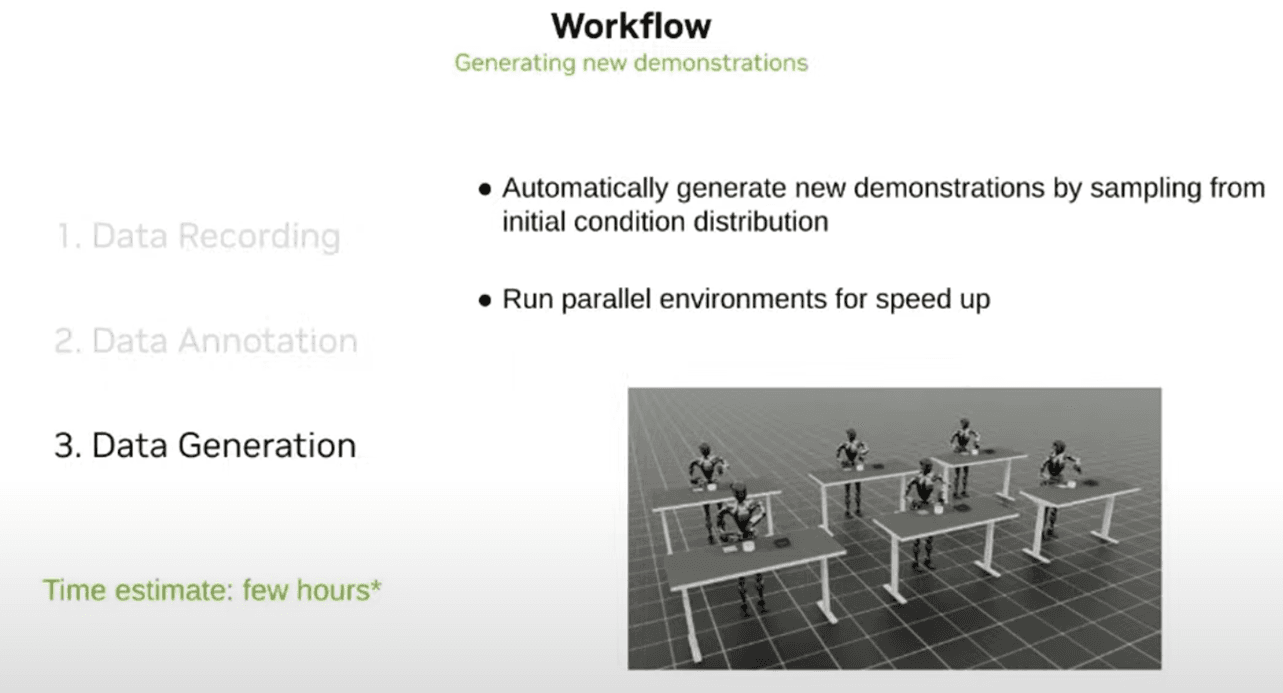

GR00T-Mimic Workflow: Step 3 – Data Generation

Key Message

Data generation is the core step, in which new robot training data is generated automatically from the teleoperation demonstrations. Isaac Lab’s parallel simulation capabilities allow large datasets to be generated quickly, which shortens the overall training cycle.

Summary

- Automated Data Generation

  - New robot motion data is generated automatically for varied situations and environments, based on the teleoperation demonstrations.

  - Changing the initial conditions produces diverse demonstrations.

- Parallel Simulation Support

  - Dozens to hundreds of environments can run in parallel within Isaac Lab.

  - This enables large-scale data generation for the same task, so training data becomes available much sooner.

- Time Efficiency

  - Compared with generating trajectories one at a time, parallel generation can be over 100× faster.

- Key Advantages

  - Rapid and scalable dataset creation

  - Supports learning across complex and diverse tasks and environments

  - Essential for efficient robot training
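The parallel-generation claim can be sanity-checked with a little arithmetic. The sketch below assumes, as an idealization not stated in the source, that each environment yields one successful trajectory per round and that a round takes the same time however many environments run. The function names and the position ranges are hypothetical and are not Isaac Lab APIs.

```python
import math
import random


def sample_initial_positions(num_envs, seed=0,
                             x_range=(0.3, 0.6), y_range=(-0.2, 0.2)):
    """Draw one random (x, y) object start position per environment."""
    rng = random.Random(seed)
    return [(rng.uniform(*x_range), rng.uniform(*y_range))
            for _ in range(num_envs)]


def rounds_needed(target_trajectories, num_envs):
    """Simulation rounds required if every environment succeeds each round."""
    return math.ceil(target_trajectories / num_envs)


positions = sample_initial_positions(num_envs=100)
sequential_rounds = rounds_needed(1000, 1)    # one environment at a time
parallel_rounds = rounds_needed(1000, 100)    # 100 environments at once
print(len(positions), sequential_rounds, parallel_rounds,
      sequential_rounds / parallel_rounds)
```

Under these assumptions the speedup equals the number of parallel environments, so exceeding 100× would require more than 100 environments. Failed attempts and per-round overhead would lower the real figure.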

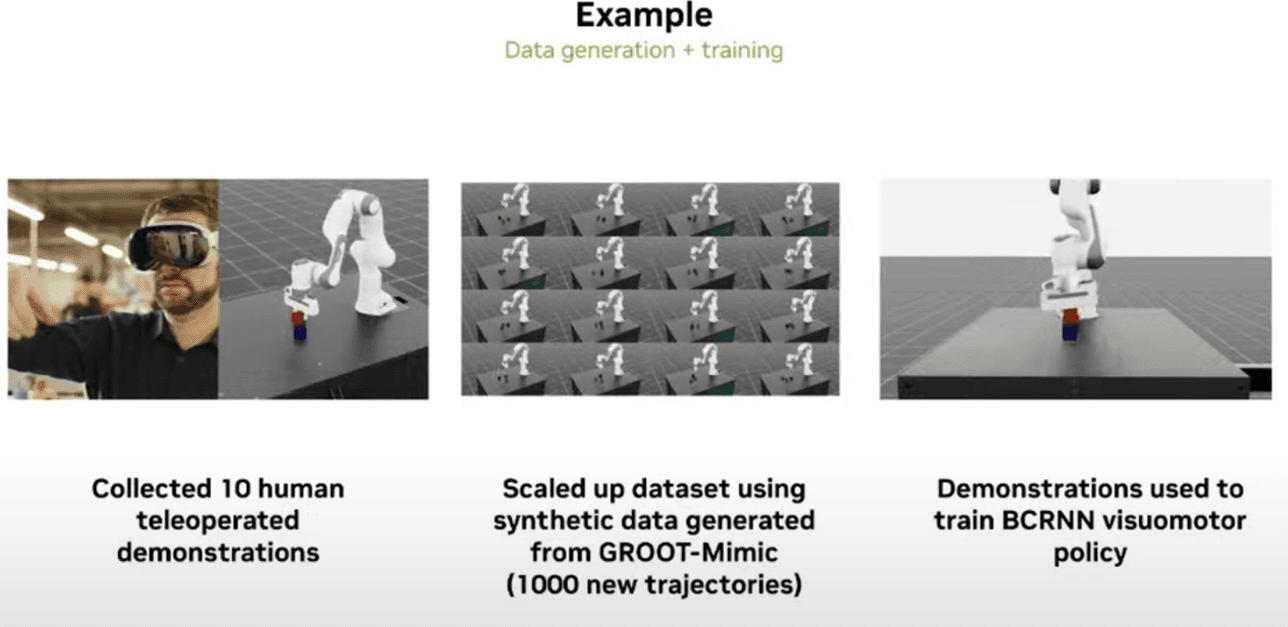

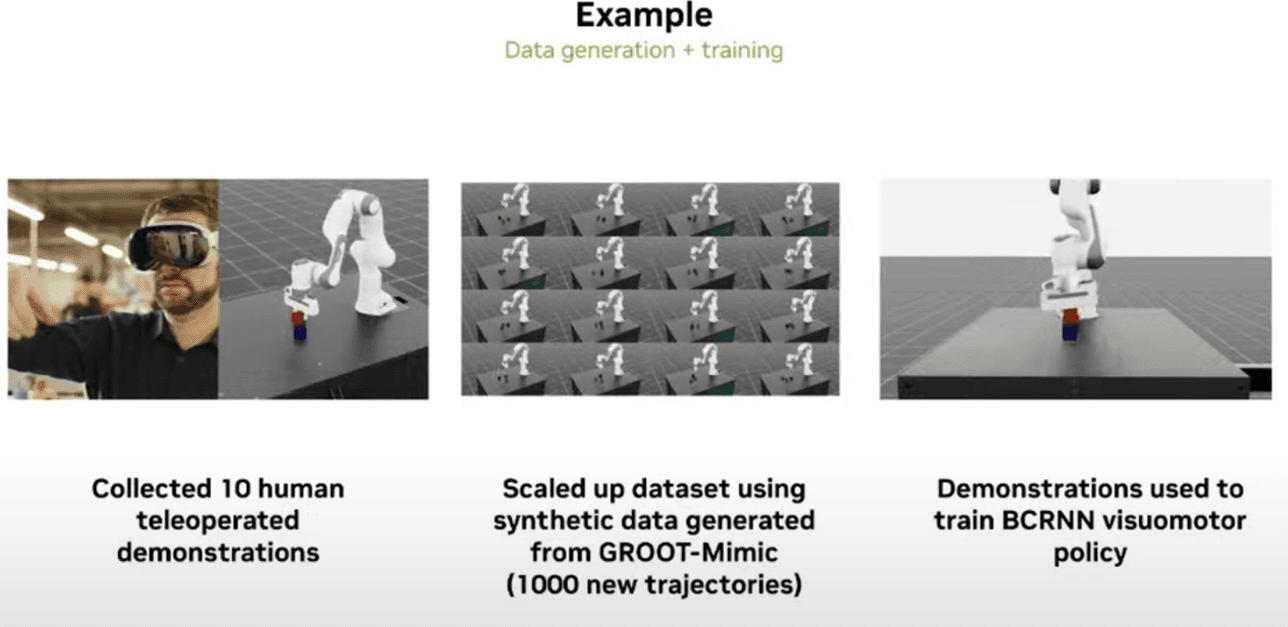

GR00T-Mimic Workflow Example: Data Generation + Learning

Key Message

Using the GR00T-Mimic workflow, a small number of human demonstrations can be expanded into a large training dataset (e.g., 1,000 trajectories), enabling robots to successfully learn visuomotor tasks such as block stacking.

Summary

- Collecting Teleoperation Demonstrations

  - A human operator performs a task (e.g., stacking blocks) 10 times through teleoperation.

  - These demonstrations become the foundation of robot learning.

- Large-Scale Training Data Generation

  - Using GR00T-Mimic, the 10 demonstrations are expanded into 1,000 synthetic trajectories.

  - This enables large-scale training with minimal human effort.

- Robot Learning and Performance Verification

  - The generated data is used to train a BCRNN visuomotor policy.

  - The robot learns and successfully performs the original task (e.g., stacking blocks).

- Key Advantages

  - Minimal human demonstrations → large-scale training data

  - High efficiency, fast training cycles, scalable learning

  - The entire workflow (data generation, learning, validation) is completed within Isaac Lab

What is BCRNN? (Behavior Cloning Recurrent Neural Network)

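- A policy that combines Behavior Cloning (supervised learning that imitates human demonstrations) with an RNN (Recurrent Neural Network, which carries a hidden state across time steps), so each predicted action can depend on the history of observations.

- Suited to learning continuous robot action sequences such as “grasp → move → place”.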
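To make the two ingredients concrete, here is a minimal pure-Python sketch of a recurrent policy and a behavior cloning loss. It is an illustration only, not the implementation used in the workflow: the class `TinyRecurrentPolicy`, the Elman-style update, the random weights, and the mean-squared-error loss are all assumptions, and the training loop (gradient updates) is omitted.

```python
import math
import random


class TinyRecurrentPolicy:
    """Elman-style RNN: h_t = tanh(W_x x_t + W_h h_{t-1}), a_t = W_a h_t."""

    def __init__(self, obs_dim, hidden_dim, action_dim, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.w_x = mat(hidden_dim, obs_dim)
        self.w_h = mat(hidden_dim, hidden_dim)
        self.w_a = mat(action_dim, hidden_dim)
        self.hidden_dim = hidden_dim

    @staticmethod
    def _matvec(m, v):
        return [sum(w * x for w, x in zip(row, v)) for row in m]

    def rollout(self, observations):
        """Predict one action per observation, carrying hidden state forward."""
        h = [0.0] * self.hidden_dim
        actions = []
        for obs in observations:
            pre = [a + b for a, b in zip(self._matvec(self.w_x, obs),
                                         self._matvec(self.w_h, h))]
            h = [math.tanh(p) for p in pre]
            actions.append(self._matvec(self.w_a, h))
        return actions


def bc_loss(predicted, demonstrated):
    """Mean squared error between predicted and demonstrated actions."""
    errors = [(p - d) ** 2
              for pa, da in zip(predicted, demonstrated)
              for p, d in zip(pa, da)]
    return sum(errors) / len(errors)


policy = TinyRecurrentPolicy(obs_dim=2, hidden_dim=4, action_dim=1)
actions = policy.rollout([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(actions)
```

Because the hidden state is carried forward, the same observation at two different time steps will generally produce different actions, which is what lets such a policy track where it is in a sequence like “grasp → move → place”. Training would adjust the weights to reduce `bc_loss` against the demonstrated actions.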

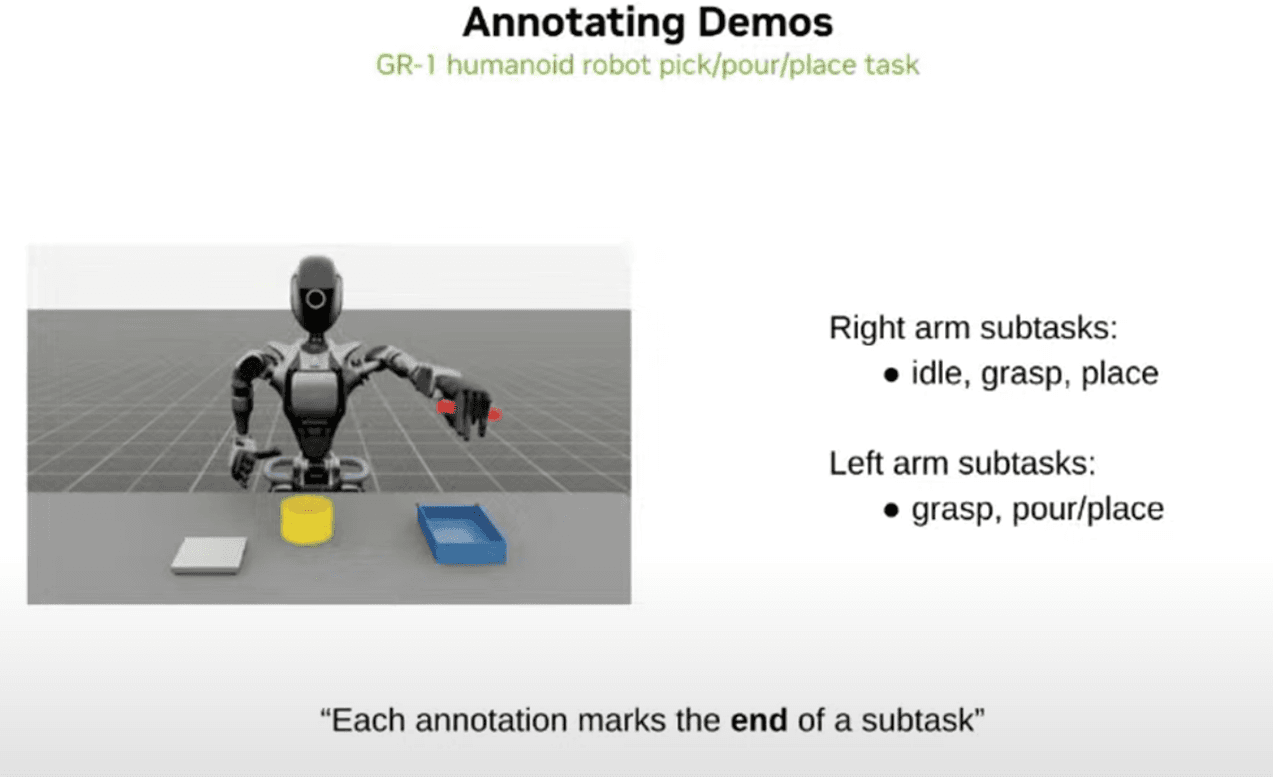

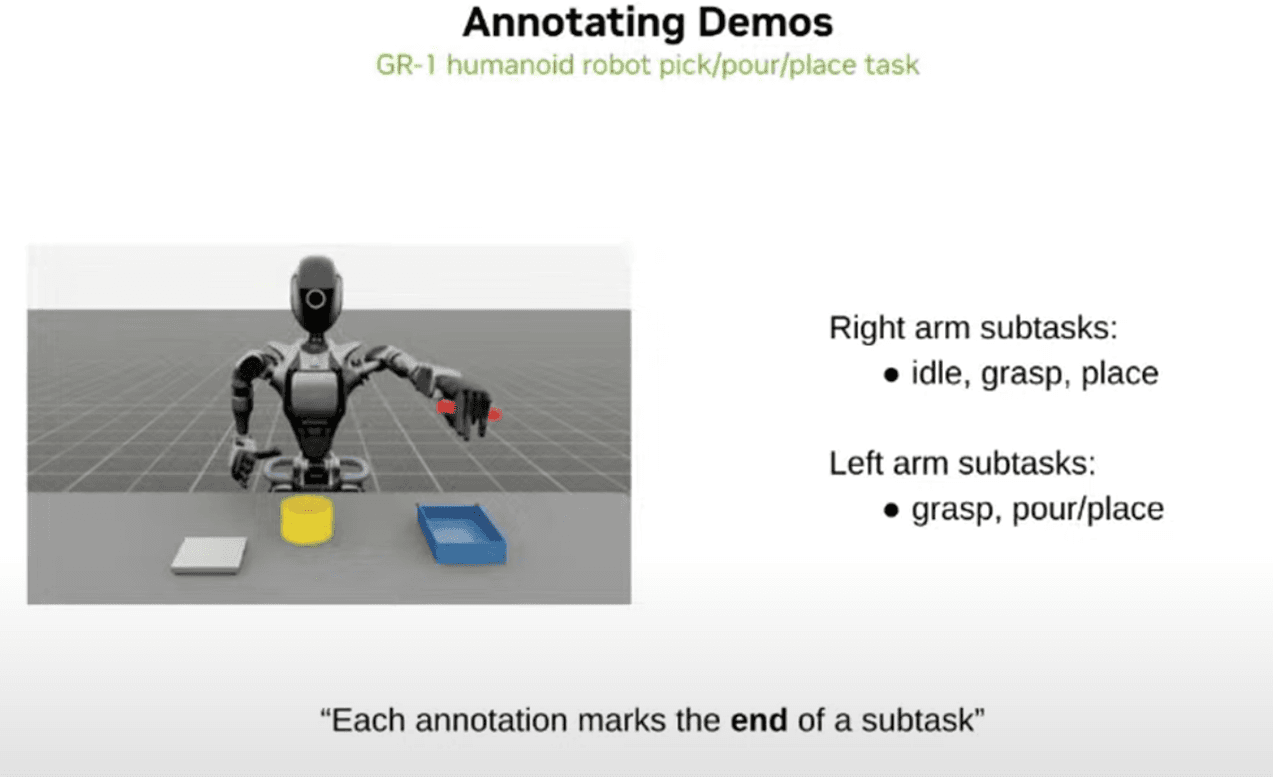

GR00T-Mimic Workflow Example: GR-1 Humanoid Robot Task Annotation

Key Message

Using the GR-1 humanoid robot as an example, the GR00T-Mimic workflow defines robot tasks (e.g., grasping, stacking, pouring) in terms of subtasks, and marks the end time of each subtask to generate structured training datasets.

Summary

- Example Task: GR-1 Robot Performing Grasp, Stack, Pour

  - The robot performs a different action sequence with each arm.

    - Right arm: Idle → Grasp (yellow cylinder) → Pass to left arm

    - Left arm: Grasp (red beaker) → Pour → Move to blue container

- Defining Subtask Boundaries

  - Each subtask is delimited by a start moment and an end moment.

  - Examples: the end of right-arm idle, the end of the grasp, the end of pouring.

- Core Principle: Define Based on “End Moment”

  - When annotating, the most important item to mark is the moment each subtask ends.

  - These marked end points are critical for training and trajectory generation.

- Key Advantages

  - Even complex tasks can be clearly defined in terms of subtasks

  - Enables intuitive and structured creation of robot training data
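The "end moment" principle can be sketched as finding, for each arm and subtask, the first step at which a completion signal switches on. Everything in the example is hypothetical: the 8-step recording, the signal names, and the helper `first_true_index` are for illustration and do not describe the actual annotation format.

```python
def first_true_index(signal):
    """Return the first step at which a 0/1 completion signal is 1, or None."""
    for step, value in enumerate(signal):
        if value:
            return step
    return None


# Hypothetical 8-step recording; one completion signal per arm and subtask.
signals = {
    "right_arm": {
        "idle":  [0, 0, 1, 1, 1, 1, 1, 1],
        "grasp": [0, 0, 0, 0, 1, 1, 1, 1],
    },
    "left_arm": {
        "grasp": [0, 0, 0, 1, 1, 1, 1, 1],
        "pour":  [0, 0, 0, 0, 0, 0, 1, 1],
    },
}

end_moments = {
    arm: {name: first_true_index(sig) for name, sig in subtasks.items()}
    for arm, subtasks in signals.items()
}
print(end_moments)
```

Keeping a separate set of end moments per arm mirrors the example above, where the right and left arms run different subtask sequences.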